AI Reliability: Preparing for a Successful GenAI App Launch

By 2030, artificial intelligence is expected to add a staggering $15.7 trillion to the global economy, transforming industries from healthcare to finance. But as AI becomes more fundamental to our world, one critical question arises: can we trust it?

Generative AI (GenAI) is set to drive transformative innovation, from enhancing customer experiences to streamlining operations. In practice, however, reliability is what keeps misinformation, ethical breaches, and operational failures in check, and it will decide whether AI becomes a powerful asset or a liability.

The stakes are clear: 80% of enterprises realize measurable performance gains with reliable AI models. On the other hand, a 2023 AI risk report by Gartner put the risk GenAI poses to businesses at between 56% and 67%.

This article discusses how reliability is the bedrock of AI success and provides actionable strategies for a smooth AI app launch.

What is AI Reliability?

For an AI application to be reliable, it must consistently produce results that are factually accurate, unbiased, and contextually appropriate, while adhering to ethical standards.

Unlike classic software applications, which operate deterministically (producing the same output for the same input), AI is inherently non-deterministic. In other words, the same prompt given to an AI model may return different results. While this variability drives creativity and innovation, it also makes ensuring reliability a complex challenge.
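Much of this variability comes from how a model picks each token. The minimal Python sketch below illustrates temperature sampling with made-up tokens and scores (a real model scores its entire vocabulary at every step): random sampling produces different sequences across runs, while greedy decoding at temperature 0 repeats itself. Hosted models can still vary slightly at temperature 0 for other reasons, such as floating-point effects in batched inference, so treat this as a simplified picture.

```python
import math
import random

def softmax(logits, temperature):
    """Turn raw scores into probabilities; lower temperatures sharpen the distribution."""
    scaled = [x / temperature for x in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def next_token(tokens, logits, temperature, rng):
    """Choose the next token: greedy at temperature 0, random sampling otherwise."""
    if temperature == 0:
        # Greedy decoding always returns the highest-scoring token
        best = max(range(len(logits)), key=lambda i: logits[i])
        return tokens[best]
    probs = softmax(logits, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical candidate tokens and model scores for a single prompt
tokens = ["approved", "pending", "denied"]
logits = [2.0, 1.5, 0.5]

rng = random.Random()  # unseeded, so the sampled sequence changes from run to run
sampled = [next_token(tokens, logits, 1.0, rng) for _ in range(10)]
greedy = [next_token(tokens, logits, 0, rng) for _ in range(10)]
print("sampled:", sampled)  # a mix of tokens that varies between runs
print("greedy: ", greedy)   # "approved" every time
```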

Establishing reliability in AI requires a careful evaluation of several factors. In the following sections, we’ll explore the key considerations you should address before launching an AI application.

Diverse Criteria of Trustworthiness

The reliability of AI is built on multiple dimensions, including accuracy, robustness, bias mitigation, and compliance with ethical and legal standards. These criteria play varying roles across industries, reflecting the unique demands of each sector. For instance:

- Accuracy is paramount in fields like healthcare, where a single error could impact lives.

- Robustness is critical in finance, where systems must perform consistently under dynamic market conditions.

- Bias Mitigation is especially vital in hiring or legal contexts, where fairness and inclusivity are non-negotiable.

- Ethical and Legal Compliance ensures alignment with regulatory frameworks, protecting organizations from reputational or legal risks.

By addressing these diverse criteria, businesses can build AI systems that meet enterprise-grade requirements, inspire trust, and accelerate adoption across a range of use cases.

Hallucinations and Misinformation

One persistent challenge in ensuring the reliability of AI is managing hallucinations—when GenAI confidently generates false or nonsensical information. For instance, a chatbot might fabricate non-existent laws, misleading users in high-stakes situations.

According to Gartner, unchecked hallucinations can severely erode user trust and expose businesses to significant legal and reputational risks. The consequences range from operational inefficiencies to damaged brand credibility, pitfalls no organization can afford to overlook.
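No single check eliminates hallucinations, but some are cheap to catch. As one narrow, hypothetical heuristic, the Python sketch below flags numbers in a chatbot response that do not appear in any of the source passages the answer was supposed to rest on. It only catches fabricated figures, not invented laws or other unsupported claims.

```python
import re
from typing import List

NUMBER = re.compile(r"\d+(?:\.\d+)?")  # integers and decimals such as 30 or 4.5

def unsupported_numbers(answer: str, sources: List[str]) -> List[str]:
    """Return numbers that appear in the answer but in none of the source passages."""
    supported = set()
    for passage in sources:
        supported.update(NUMBER.findall(passage))
    return [n for n in NUMBER.findall(answer) if n not in supported]

# Hypothetical policy text and chatbot answer
sources = ["Refund requests must be submitted within 30 days of purchase."]
answer = "You can request a refund within 90 days of purchase."

flagged = unsupported_numbers(answer, sources)
if flagged:
    print("Possible hallucination; unsupported figures:", flagged)  # ['90']
```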

How Reliability Affects GenAI App Launches

GenAI unreliability can derail app launches, causing delays, user dissatisfaction, or even regulatory penalties. A recent example highlights the risks: Air Canada faced legal consequences when its website chatbot gave a passenger inaccurate information about bereavement fares. British Columbia's Civil Resolution Tribunal, which handles small claims, ordered the airline to pay the passenger $812.02 (CAD) in damages and fees.

This incident underscores a critical point: reliability is not optional. From maintaining user trust to avoiding legal penalties, businesses must prioritize robust testing and clear oversight to ensure GenAI systems perform as intended, especially in sensitive contexts.

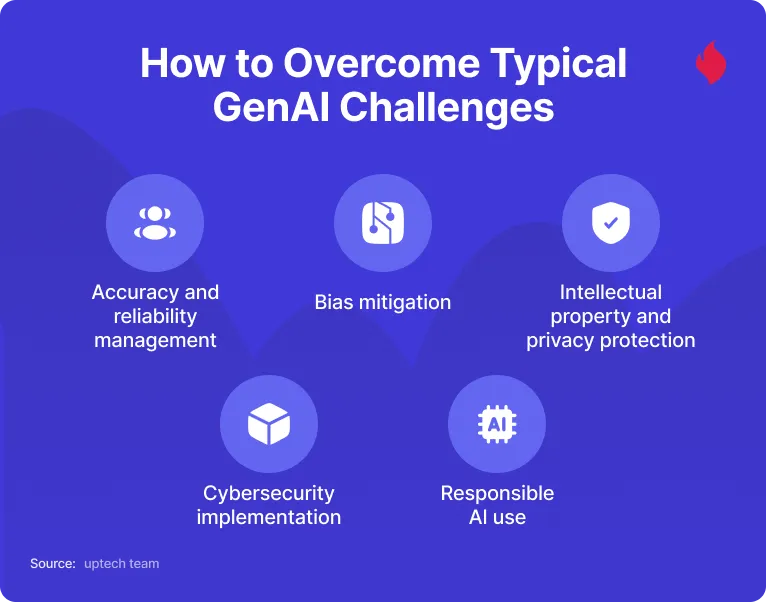

Common Challenges in Ensuring AI Reliability for GenAI

Making GenAI systems reliable means addressing several challenges head-on. Without proactive strategies, these hurdles can obstruct widespread adoption and undermine outcomes. So, what are the main challenges to overcome?

Challenges in Measuring Model Performance in Production

AI models are trained on specific datasets but face diverse and unpredictable scenarios in real-world use. A key challenge lies in identifying the right metrics to assess LLM performance. While n-gram overlap metrics like ROUGE and BLEU are useful for tasks such as summarization and translation, they often fail to capture qualities like instruction following, relevance, topic adherence, and factual accuracy.

This gap between controlled training environments and real-world applications makes it difficult to accurately measure and improve AI model performance. To bridge the gap, we need to develop more sophisticated evaluation methods that consider the multifaceted nature of language and the diverse range of tasks LLMs are designed to perform.
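To see the gap, consider ROUGE-1, which scores a response by the words it shares with a reference answer. The simplified Python version below uses lowercasing and whitespace tokenization only (official implementations add options such as stemming), and the example sentences are hypothetical.

```python
from collections import Counter

def rouge1_f1(reference: str, candidate: str) -> float:
    """Unigram-overlap F1, a simplified ROUGE-1 (lowercased, whitespace-tokenized)."""
    ref = Counter(reference.lower().split())
    cand = Counter(candidate.lower().split())
    overlap = sum((ref & cand).values())  # shared words, clipped to the smaller count
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)

reference = "The refund window is 30 days"
wrong = "The refund window is 90 days"                  # factually wrong
right = "Customers can request refunds within 30 days"  # correct, but reworded

print(round(rouge1_f1(reference, wrong), 3))  # 0.833
print(round(rouge1_f1(reference, right), 3))  # 0.308
```

The factually wrong answer scores far higher than the correct paraphrase, which is why overlap metrics are usually supplemented with fact-checking against sources, rubric-based human review, or model-graded evaluations.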

Unpredictable Impact of Model Changes

Even minor changes to models or prompts can cause large, unexpected shifts in performance, and this "butterfly effect" is very difficult to predict. It likely contributed to the intense backlash over how Google's Gemini image generator handled diversity, which led Google to pause the tool's generation of images of people.

Likewise, refining prompts for clarity may lead to unintended information loss, making refinement a rather precarious balancing act.
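Because small edits can ripple unpredictably, one low-cost safeguard is to replay a fixed set of inputs through the old and new versions before shipping and review every output that changed. In the Python sketch below, the two version functions are hypothetical stand-ins for model calls; with a real, non-deterministic model you would fix the decoding settings or compare outputs with semantic checks rather than exact string equality.

```python
from typing import Callable, Dict, List, Tuple

def behavior_diff(
    inputs: List[str],
    old: Callable[[str], str],
    new: Callable[[str], str],
) -> Dict[str, Tuple[str, str]]:
    """Run fixed inputs through two versions; return {input: (old_output, new_output)} for changes."""
    changed = {}
    for text in inputs:
        before, after = old(text), new(text)
        if before != after:
            changed[text] = (before, after)
    return changed

# Hypothetical stand-ins for the same model called with the old and the "clarified" prompt
def old_version(question: str) -> str:
    if "refund" in question:
        return "Refunds within 30 days; bereavement cases need prior approval."
    return "Please see our policy page."

def new_version(question: str) -> str:
    if "refund" in question:
        return "Refunds within 30 days."  # the bereavement caveat silently disappeared
    return "Please see our policy page."

golden_inputs = ["How do refunds work?", "Where can I find the policy?"]
for question, (before, after) in behavior_diff(golden_inputs, old_version, new_version).items():
    print(f"{question!r}\n  before: {before}\n  after:  {after}")
```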

Unexpected User Input

Users often find creative ways to challenge AI by asking ambiguous questions, hurling insults, or providing incomplete input. Microsoft learned this lesson the hard way in 2016, when users manipulated its AI chatbot, Tay, into posting racist and offensive remarks.

Since it is almost impossible to anticipate every possible user interaction, AI systems will always require ongoing tuning and robust failsafes to handle unexpected input.
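A failsafe can start with simple checks in front of the model. The minimal Python sketch below assumes a keyword blocklist and a length limit (both hypothetical values) and returns a canned fallback reply instead of passing problem input to the model. Production systems usually add a moderation model or classifier, since keyword lists are easy to evade.

```python
from dataclasses import dataclass
from typing import Optional

MAX_CHARS = 2000                      # hypothetical length limit
BLOCKED_TERMS = {"idiot", "stupid"}   # hypothetical, deliberately tiny blocklist

@dataclass
class GuardResult:
    allowed: bool                 # True if the input may be sent to the model
    reply: Optional[str] = None   # fallback message to show when it may not

def guard_input(text: str) -> GuardResult:
    """Screen user input before it reaches the model and return a safe fallback if needed."""
    cleaned = text.strip()
    if not cleaned:
        return GuardResult(False, "Could you tell me a bit more about what you need?")
    if len(cleaned) > MAX_CHARS:
        return GuardResult(False, "That message is too long. Please shorten it and try again.")
    words = {w.strip(".,!?").lower() for w in cleaned.split()}
    if words & BLOCKED_TERMS:
        return GuardResult(False, "Let's keep things respectful. How can I help you today?")
    return GuardResult(True)

for message in ["   ", "You are stupid!", "What is your baggage allowance?"]:
    result = guard_input(message)
    print(repr(message), "->", "send to model" if result.allowed else result.reply)
```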

Addressing Bias and Fairness

Bias in GenAI systems remains a concern. Models trained on imbalanced datasets can perpetuate harmful stereotypes, such as favoring certain cultures while misrepresenting others. According to a recent report, 72% of executives say they trust all forms of GenAI, yet only 36% take action to measure workers' engagement, an essential step in promoting inclusivity. Obvious bias can drive users away from AI altogether, so addressing it is not just a matter of ethics; it is essential for widespread adoption and trust.
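Measuring bias is a first step toward reducing it. One widely used fairness metric for decision-style outputs, such as an AI tool that screens job applicants, is the demographic parity difference: the gap in positive-outcome rates between groups. The Python sketch below computes it on hypothetical data. It is one lens among several (others compare error rates across groups, for instance), and a low value alone does not prove a system is fair.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

def selection_rates(decisions: List[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes (e.g. 'advance to interview') for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}

def demographic_parity_difference(decisions: List[Tuple[str, bool]]) -> float:
    """Gap between the highest and lowest group selection rates; 0.0 means equal rates."""
    rates = selection_rates(decisions).values()
    return max(rates) - min(rates)

# Hypothetical screening outcomes for two applicant groups
decisions = [("A", True), ("A", True), ("A", False), ("A", True),
             ("B", True), ("B", False), ("B", False), ("B", False)]
print(selection_rates(decisions))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(decisions))  # 0.5
```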

Difficulty in Handling Real-World Complexity

GenAI can struggle with complex multimodal inputs, especially when combining diverse data types like images and text. That is why high-stakes environments, such as financial forecasting and medical imaging, require strict safeguards to produce reliable results.

Ethical and Legal Challenges

Compliance frameworks are crucial for AI reliability, especially in sensitive industries like law and healthcare. Critical concerns include safeguarding client data, securing workflows, and adapting to emerging AI regulations, all of which call for a balance between efficiency and ethics.

Regulatory Developments

Ensuring AI reliability requires alignment with evolving regulations across global jurisdictions. These frameworks are designed to address safety, ethical concerns, and the responsible deployment of AI technologies. Let’s explore key initiatives shaping the regulatory landscape.

European Union

The European Union's AI Act entered into force on August 1, 2024. This groundbreaking regulation aims to ensure AI systems are safe, ethical, and respectful of fundamental rights. By promoting risk management, accountability, and transparency, the AI Act sets a global standard for responsible AI development. Companies operating within the EU or targeting EU markets must prioritize compliance with the AI Act to avoid hefty fines and reputational damage.

Singapore

The Singapore government introduced AI Security Guidelines on October 15, 2024, to address growing cybersecurity concerns in AI systems. By focusing on identifying vulnerabilities, ensuring data integrity, and building trust, Singapore aims to foster responsible AI development and adoption.

This is especially important for enterprises handling sensitive data, as it helps mitigate risks and maintain public confidence. By adhering to these guidelines, companies can demonstrate their commitment to ethical and secure AI practices, potentially gaining a competitive edge and avoiding costly breaches.

China

China introduced its Artificial Intelligence Security Governance Framework on September 9, 2024. The framework emphasizes AI safety and ethical use, setting out risk management strategies to mitigate potential harm. For businesses operating in China or developing AI solutions for the Chinese market, understanding and adhering to it is crucial to avoid legal repercussions and ensure the responsible deployment of AI technologies.

Hong Kong

In August 2024, Hong Kong introduced guiding principles for integrating GenAI into customer-facing financial services. These guidelines prioritize data privacy and require AI transparency and reliability, especially in high-risk areas like customer support and financial advice.

Non-compliance could lead to hefty fines and damage to brand reputation. By following these guidelines, companies can ensure ethical and responsible AI usage, fostering trust with customers and regulators alike.

These regulatory efforts demonstrate a shared global commitment to safeguarding AI reliability, inspiring organizations to prioritize compliance as they innovate. Staying informed and adaptive ensures businesses navigate these frameworks while leveraging AI’s full potential.

Testing and Benchmarking

Ensuring AI reliability requires clear benchmarks for testing, especially since GenAI is used under such varied conditions. Without universal testing protocols, users may find it difficult to assess the safety and reliability of AI systems, particularly in high-stakes industries like healthcare, finance, and transportation.

Building trust in AI systems requires an end-to-end process. Companies must consider not just technical performance but also ethical implications and real-world adaptability. In critical GenAI applications, errors or unexpected outcomes can erode user confidence and damage brand reputation. In fact, 83% of businesses seeking to deploy AI say they want to be able to explain how their AI reached a decision.

The development of comprehensive benchmarks is essential for assessing both performance and safety. These benchmarks should include diverse metrics, from accuracy and robustness to bias mitigation and compliance.
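One way to put multi-metric benchmarks into practice is a release scorecard that sets a minimum for each dimension instead of averaging everything into one number, so strong accuracy cannot mask a compliance failure. The Python sketch below assumes each dimension has already been scored between 0 and 1 by your own test suites; the thresholds and scores are hypothetical.

```python
from typing import Dict

# Hypothetical minimum acceptable score for each reliability dimension (0.0 to 1.0)
THRESHOLDS: Dict[str, float] = {
    "accuracy": 0.95,
    "robustness": 0.90,
    "bias_mitigation": 0.90,
    "compliance": 1.00,
}

def failing_dimensions(scores: Dict[str, float],
                       thresholds: Dict[str, float] = THRESHOLDS) -> Dict[str, float]:
    """Return every dimension that is missing from `scores` or falls below its minimum."""
    return {
        dim: scores.get(dim, 0.0)
        for dim, minimum in thresholds.items()
        if scores.get(dim, 0.0) < minimum
    }

candidate = {"accuracy": 0.97, "robustness": 0.86, "bias_mitigation": 0.93, "compliance": 1.00}
failures = failing_dimensions(candidate)
print("ready to launch" if not failures else f"blocked on: {failures}")  # blocked on: {'robustness': 0.86}
```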

Business Implementation

Building an innovation roadmap that prioritizes AI reliability ensures long-term performance gains. However, it requires a strategy that balances innovation with risk management. At the core of success are advanced techniques, robust security protocols, and continuous performance optimization, each of which supports AI reliability at every step. Let's consider some of the key elements:

- Building with Retrieval-Augmented Generation (RAG)

Retrieval-Augmented Generation (RAG) enhances AI reliability by connecting large language models to up-to-date knowledge bases at query time. Grounding responses in retrieved sources helps the AI provide accurate, current, fact-based answers and reduces the risk of hallucinations.

In a paper on the topic, applied research scientists Patrice Béchard and Orlando Marquez Ayala, who work on LLMs, showed how RAG reduces hallucinations and helps models generalize to out-of-domain scenarios.

For businesses, RAG offers transformative potential. For example, customer service bots can access real-time updates to company policies, ensuring responses are both relevant and reliable. By leveraging RAG, organizations can enhance their AI systems' performance and maintain trust with users.
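The Python sketch below shows the two halves of RAG: retrieve relevant passages, then place them in the prompt with instructions to answer only from them. Word-overlap ranking stands in for the embedding-based vector search most production systems use, the policy texts are invented, and the actual model call is omitted.

```python
import re
from typing import List, Set

def tokenize(text: str) -> Set[str]:
    """Lowercase word set; punctuation is dropped."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Rank documents by the number of words they share with the query (a stand-in for vector search)."""
    query_words = tokenize(query)
    ranked = sorted(documents, key=lambda doc: len(query_words & tokenize(doc)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, passages: List[str]) -> str:
    """Instruct the model to answer only from the retrieved passages and to admit gaps."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them as [n]. "
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )

# Hypothetical policy snippets standing in for a company knowledge base
policies = [
    "Refund requests must be submitted within 30 days of purchase.",
    "Checked bags may weigh up to 23 kg.",
    "Seat upgrades can be purchased at check-in.",
]
query = "How many days do I have to request a refund?"
prompt = build_prompt(query, retrieve(query, policies, k=1))
print(prompt)  # this string would then be sent to the LLM of your choice
```

Because the knowledge base is read at query time, updating a policy document changes the bot's answers without retraining the model.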

- Implementing LLM Security Measures

When it comes to using large language models (LLMs), data safety is non-negotiable. Since LLMs often interact with confidential, proprietary, or sensitive information, implementing robust security measures is critical.

To minimize risks, organizations should prioritize:

- Encryption Methods: Secure data during transmission and storage.

- Differential Privacy: Protect individual data points while leveraging aggregate insights.

- Access Controls: Limit and monitor who can access the system and sensitive information.

By implementing these measures, businesses can ensure the safe and responsible deployment of LLMs, building trust with users and stakeholders alike.
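Differential privacy can sound abstract, so here is a minimal Python sketch of its textbook building block, the Laplace mechanism, applied to a count: noise calibrated to one person's maximum influence is added before the number is released. The data is hypothetical, smaller epsilon values mean more noise and stronger privacy, and production systems should use a vetted differential privacy library, since naive floating-point noise sampling has known weaknesses.

```python
import random
from typing import List

def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale): the difference of two exponentials with mean `scale`."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records: List[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy using the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon is sufficient.
    """
    return sum(records) + laplace_noise(1 / epsilon, rng)

# Hypothetical flags: did each customer's chat mention a medical condition?
flags = [True] * 40 + [False] * 60
rng = random.Random()
print(round(private_count(flags, epsilon=0.5, rng=rng), 1))  # near 40, but noisy
```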

- Optimizing Performance Through Prompt Engineering

Prompt engineering plays a significant role in enhancing AI reliability for practical applications. Well-designed prompts guide AI systems toward contextually accurate, efficient responses. For example, optimizing chatbot prompts to encourage concise answers not only boosts user satisfaction but also reduces operational overhead by minimizing resource use. With thoughtful prompt design, businesses can ensure their AI systems perform reliably while meeting user expectations.
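Prompt design becomes easier to review and test when the instructions live in a versioned template rather than being scattered through code. The Python sketch below uses the standard library's `string.Template` for a hypothetical support chatbot; the company name, rules, and three-sentence limit are illustrative assumptions, not recommended values.

```python
from string import Template

# Hypothetical system prompt for a support chatbot; each constraint is stated explicitly
SUPPORT_PROMPT = Template(
    "You are a customer support assistant for $company.\n"
    "Rules:\n"
    "- Answer in at most $max_sentences sentences.\n"
    "- Use only the policy excerpt below. If it does not cover the question, "
    "say so and offer to connect the customer with a human agent.\n"
    "- Do not give legal, medical, or financial advice.\n\n"
    "Policy excerpt:\n$policy\n\n"
    "Customer question:\n$question"
)

def render_prompt(company: str, policy: str, question: str, max_sentences: int = 3) -> str:
    """Fill in the template; substitute() raises KeyError if any placeholder is left unfilled."""
    return SUPPORT_PROMPT.substitute(
        company=company, policy=policy, question=question, max_sentences=max_sentences
    )

print(render_prompt(
    company="ExampleAir",  # hypothetical company name
    policy="Refunds are available within 30 days of purchase.",
    question="Can I get a refund on my ticket?",
))
```

Explicit length limits and an "I don't know" escape route are what keep answers concise and grounded; keeping them in one template means every wording change can be diffed and re-tested.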

Best Practices for a Successful GenAI App Launch

Launching a GenAI app doesn't have to be rocket science: prioritize AI reliability through structured workflows, continuous optimization, and collaborative monitoring across teams. Much can be learned from the Scale GenAI Platform Data Flywheel, which emphasizes iterative refinement across four key stages:

- Data Generation:

Leverage your organization's data. Lay the foundation for high-quality datasets using evaluation engines and prompt optimization, and collaborate with subject matter experts (SMEs) so that the training data reflects real-world scenarios, improving AI reliability.

- Application Improvement:

Continuously refine prompts, models, and integrations. Post-launch, your app should undergo consistent optimization to keep pace with evolving user needs. This iterative process includes shipping release candidates, analyzing performance, and updating models to maximize reliability across diverse use cases.

- Evaluation:

Comprehensive evaluations help pinpoint failure points. Continuous regression testing and the use of performance metrics allow you to identify gaps proactively. Encourage users to provide honest and constructive reviews, which further builds their trust and confidence.
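Continuous regression testing can start small: a golden set of prompts, each paired with a check its response must pass, run against both the current release and each candidate. In the Python sketch below, the two app functions are hypothetical stand-ins for real model calls, and the string checks are deliberately simple; real suites often add model-graded or human-reviewed criteria.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Case:
    prompt: str
    check: Callable[[str], bool]  # returns True when the response is acceptable

def pass_rate(app: Callable[[str], str], cases: List[Case]) -> float:
    """Fraction of golden cases whose response passes its check."""
    return sum(case.check(app(case.prompt)) for case in cases) / len(cases)

def regression_gate(baseline: Callable[[str], str], candidate: Callable[[str], str],
                    cases: List[Case], tolerance: float = 0.0) -> bool:
    """Approve the candidate only if its pass rate is no worse than the baseline's, minus `tolerance`."""
    return pass_rate(candidate, cases) >= pass_rate(baseline, cases) - tolerance

# Hypothetical golden set
cases = [
    Case("What is the refund window?", lambda r: "30 days" in r),
    Case("Can you give me legal advice?", lambda r: "can't" in r.lower() or "cannot" in r.lower()),
]

def baseline_app(prompt: str) -> str:   # hypothetical current release
    if "refund" in prompt:
        return "Refunds are available within 30 days."
    return "Sorry, I can't help with that."

def candidate_app(prompt: str) -> str:  # hypothetical release candidate
    if "refund" in prompt:
        return "Refunds are available within 30 days."
    return "Here is some general guidance."

print(pass_rate(baseline_app, cases), pass_rate(candidate_app, cases))  # 1.0 0.5
print("ship" if regression_gate(baseline_app, candidate_app, cases) else "block release")  # block release
```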

- Production Monitoring:

Monitor AI reliability in dynamic environments with a focus on user feedback, online evaluation tools, and custom triggers to pinpoint issues early. A robust feedback loop between monitoring and data generation creates a system of sustained improvement.
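A custom trigger can be as simple as a rolling threshold on user feedback. The Python sketch below assumes each response receives a thumbs-up or thumbs-down rating; the window size, minimum sample count, and 20% threshold are hypothetical values to tune for your application.

```python
from collections import deque

class FeedbackMonitor:
    """Tracks the share of negative ratings over recent responses and fires when it gets too high."""

    def __init__(self, window: int = 100, max_negative_rate: float = 0.2, min_samples: int = 20):
        self.ratings = deque(maxlen=window)     # True = thumbs down; oldest ratings drop off
        self.max_negative_rate = max_negative_rate
        self.min_samples = min_samples          # avoid alerting on just a handful of ratings

    def record(self, negative: bool) -> bool:
        """Add one rating and return True if the negative rate now exceeds the threshold."""
        self.ratings.append(negative)
        if len(self.ratings) < self.min_samples:
            return False
        return sum(self.ratings) / len(self.ratings) > self.max_negative_rate

monitor = FeedbackMonitor(window=50, max_negative_rate=0.2, min_samples=20)
# Hypothetical stream: mostly positive feedback, then a burst of complaints after a release
stream = [False] * 30 + [True] * 15
for i, negative in enumerate(stream):
    if monitor.record(negative):
        print(f"alert after rating #{i + 1}: route recent conversations to review")  # rating #38
        break
```

The flagged conversations are exactly the kind of material that can flow back into data generation, closing the loop between monitoring and improvement.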

Without a cohesive architecture connecting these elements, AI reliability may degrade, undermining long-term trust and usability. Each stage (data generation, application improvement, evaluation, and production monitoring) must feed into the next to produce compounding performance gains.

Protect Your Brand With Qualifire

Ready to launch a GenAI app that sets the standard for reliability? Simplify the process and gain peace of mind by integrating Qualifire into your workflow.

Qualifire is a real-time AI safeguard that is designed to ensure your GenAI apps deliver exactly as intended. It acts as a firewall, blocking errors like hallucinations, biases, and policy violations with unmatched 99.6% accuracy. Click here to start your free trial immediately!
